March 5, 4:58 AM

I am dealing with the problems mentioned in the last post. Several characters cause "FATALITY" errors during training, but I have been able to weed them out using the following piece of code:

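```python
import subprocess

# exec_string1: the Tesseract training command for the current image.
# box: name of the text file under beng.images/ whose first line holds the
# character(s) drawn in that image. Both are defined elsewhere in the script.
# os.popen4() was removed in Python 3; subprocess.run() with stderr merged
# into stdout gives the same combined output.
result = subprocess.run(exec_string1, shell=True, stdout=subprocess.PIPE,
                        stderr=subprocess.STDOUT, universal_newlines=True)
output = result.stdout

# str.find() returns -1 when the text is absent and 0 when it starts the
# output, so test membership instead of "pos > 0".
if 'FAILURE' in output:
    # Log the failing character(s) so they can be trained separately later.
    with open("beng.images/" + box, 'r') as filein, open("error", 'a') as fileout:
        fileout.write(filein.readline().rstrip("\n") + "\n")
```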

It parses the output of the training command and looks for characters that failed during training, then writes those characters to a separate file.

Now I just need to generate one really good, flawless set of training data.

March 4, 5:07 AM

It's been more than a month since I recorded any of my work here, but I *have* been working and there are lots of updates.

We now have three project members. Jinesh from IIIT Hyderabad wants to add Malayalam support to TesseractIndic, and Baali (Shantanu) from Sarai, Delhi wants to add Devanagari support. So finally I am not working alone.

Also, around a month back I went to ISI Kolkata to consult Prof. B.B. Chaudhuri. I had mailed him asking for help with training data and ground-truth data for testing. Thanks to Mr. Gora's recommendation, he agreed to meet me in Kolkata. I met him at ISI and spoke to him for about 40 minutes about different issues in Indic OCR. He discussed some really good ways to significantly reduce recognition time, and rued the lack of good research assistants.

He could not share data with me at that moment because of a lack of manpower and copyright issues. I was returning dejected, but I met Dr. Mandar Mitra at the gates. Mr. Sankarshan, my mentor who originally started me down this path, had introduced me to him in Kolkata about a month earlier. That really helped. He took me to his lab, dug through the last 7 or 8 years of his work and gave me everything useful he had. This included a lot of ground-truth data and some images with bounding-box information.

But that is not the most important thing I got there. While talking to Mandar Mitra, it dawned on us that the entire training process can be automated using Python scripts. There is no need to feed in data manually using scanners and so on.

The principle is this: Tesseract needs two things to train itself, 1) an image of the character and 2) the name of the character. This information is provided with the help of "box files". A box file contains the coordinates of the bounding boxes around characters, with labels saying which characters they are. The traditional way of training the engine is to take a scanned image and meticulously create a box file using a tool such as tesseractTrainer.py. You then edit the box file, and keep doing the same for several other images and fonts. This process was tedious enough to force me to look for new methods.
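To make the box-file idea concrete, here is a minimal Python sketch that reads one into a list of tuples. It assumes the old Tesseract 2.x layout: one `char left bottom right top` line per character, with pixel coordinates measured from the bottom-left corner of the image. Any extra trailing fields are ignored; later Tesseract versions append a page number. The name `parse_box_file` is hypothetical.

```python
from typing import List, Tuple

BoxEntry = Tuple[str, int, int, int, int]

def parse_box_file(text: str) -> List[BoxEntry]:
    """Parse box-file text into (char, left, bottom, right, top) tuples.

    Assumes one box per line: the character label followed by four integer
    coordinates. Blank or short lines are skipped; extra fields are ignored.
    """
    entries = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 5:
            continue  # blank or malformed line
        char = fields[0]
        left, bottom, right, top = (int(v) for v in fields[1:5])
        entries.append((char, left, bottom, right, top))
    return entries

sample = "ক 10 40 40 80\nখ 50 40 80 82\n"
print(parse_box_file(sample))
```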

Now let's do a little reverse engineering. What if we take a list of characters in a text file and "generate" an image from those characters? We could store the coordinates of the bounding boxes of the generated character images in a file, and then feed these to the OCR engine. It would work, right?
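The reverse-engineering idea boils down to two steps: measure where the ink landed in a rendered image, then write that down as a box-file line. Below is a minimal pure-Python sketch. It assumes the rendered character is given as a list of pixel rows (top row first), with 1 for ink and 0 for background. It also assumes a `char left bottom right top` box format measured from the bottom-left corner, with right/top one pixel past the last ink pixel. `box_line` is a hypothetical name.

```python
from typing import List, Optional

def box_line(char: str, bitmap: List[List[int]]) -> Optional[str]:
    """Return a box-file line for the ink in `bitmap`, or None if it is blank.

    `bitmap` is a list of rows, top row first; 1 marks ink, 0 background.
    Coordinates are measured from the bottom-left corner of the image,
    with right/top lying one pixel past the last ink pixel (an assumption).
    """
    height = len(bitmap)
    ink_rows = [y for y, row in enumerate(bitmap) if any(row)]
    ink_cols = [x for x in range(len(bitmap[0])) if any(row[x] for row in bitmap)]
    if not ink_rows:
        return None
    left, right = ink_cols[0], ink_cols[-1] + 1
    # Flip y: image rows count down from the top, box coordinates count up.
    top = height - ink_rows[0]
    bottom = height - (ink_rows[-1] + 1)
    return f"{char} {left} {bottom} {right} {top}"

glyph = [[0, 0, 0, 0, 0],
         [0, 0, 1, 1, 0],
         [0, 0, 1, 0, 0],
         [0, 0, 0, 0, 0]]
print(box_line("ক", glyph))  # ক 2 1 4 3
```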

Links:

- http://tesseractindic.googlecode.com/files/tesseract_trainer.beta.tar.gz - The tar ball itself

- http://code.google.com/p/tesseractindic/source/browse/trunk/tesseract_trainer/readme - The readme file

- http://www.youtube.com/watch?v=vuuVwm5ZjkI - YouTube video of the tool working for Bengali

But there are problems. Tesseract-OCR has its quirks.

Tesseract wants one bounding box to enclose a single "blob" only. A blob is a wholly connected component. So ক is a blob, and ক খ are two blobs. There are cases where a consonant+vowel sign generates two blobs, for example the 3 images below have multiple blobs:

And hence Tesseract throws a "FATALITY" error during training.

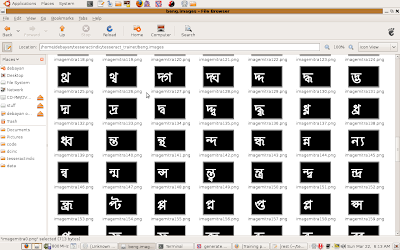
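Because a box must enclose exactly one blob, one way to catch problem glyphs before training is to count the connected components in each rendered character. The sketch below does a breadth-first flood fill over a bitmap given as rows of 1 (ink) and 0 (background). Treating diagonal neighbours as connected (8-connectivity) is an assumption here; it is not necessarily how Tesseract defines a blob.

```python
from collections import deque
from typing import List

def count_blobs(bitmap: List[List[int]]) -> int:
    """Count 8-connected groups of ink pixels (value 1) in `bitmap`."""
    h = len(bitmap)
    w = len(bitmap[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    blobs = 0
    for y in range(h):
        for x in range(w):
            if bitmap[y][x] != 1 or seen[y][x]:
                continue
            blobs += 1  # unvisited ink pixel: start a new blob
            seen[y][x] = True
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if (0 <= ny < h and 0 <= nx < w
                                and bitmap[ny][nx] == 1 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return blobs
```

A glyph for which `count_blobs` returns more than 1 could be set aside instead of being sent to training.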

So I had to change my approach a little bit. Obviously there has to be some feedback mechanism, where I parse Tesseract's output during training to see whether a particular set of characters threw errors. Once I know which ones they are, I can separate them and train them later. To accomplish this, I switched from generating a strip of character images to generating just one image per character. That way I can pinpoint the problems better.
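The feedback loop can be sketched as a function that trains one character at a time and sorts characters by whether Tesseract complained. Here `run_training` is a hypothetical callable. It stands in for whatever runs Tesseract on one character's image and returns its console output. The marker strings follow the FAILURE and FATALITY messages mentioned in these notes.

```python
from typing import Callable, Iterable, List, Tuple

ERROR_MARKERS = ("FAILURE", "FATALITY")

def split_by_training_result(
    chars: Iterable[str], run_training: Callable[[str], str]
) -> Tuple[List[str], List[str]]:
    """Train each character separately; return (trained_ok, failed)."""
    ok, failed = [], []
    for ch in chars:
        output = run_training(ch)
        if any(marker in output for marker in ERROR_MARKERS):
            failed.append(ch)  # set aside to be handled later
        else:
            ok.append(ch)
    return ok, failed
```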

The downside: too many images get generated. To train a simple font it generates 405 images, 405 box files and 405 .tr files, and all this before I have even included conjuncts. It is not much of a problem though, since the generated images are not needed once the training files have been generated.

Well, it leads me to new challenges. I remember Prof. B.B. Chaudhuri saying that training all the conjuncts will kill any recogniser, i.e., it will make recognition very slow. He also told me some cool ways to get past that. I may have to implement them. Let's see.

And yeah, Sarai cheque arrived. :)

January 29, 3:16 AM

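```python
# im, draw, font, alphabets and the drawing position (x, y) are set up
# earlier in the script (not shown).
for akshar in alphabets:
    draw.text((x, y), akshar, font=font)  # Python 3 strings are already Unicode
    leftx = x - 20  # the left end of the small bounding box
    box = (leftx, y, x + 100, y + 60)  # region of the big image holding this character
    sub_im = im.crop(box)
    # getbbox() returns the bounding box of the non-zero pixels, i.e. the text
    # drawn on a black background, relative to the sub image.
    bbox_sub = sub_im.getbbox()
    if bbox_sub is None:  # nothing was drawn in this region
        continue
    # Shift the box back into the coordinate system of the big image.
    bbox_im = (leftx + bbox_sub[0], y + bbox_sub[1],
               leftx + bbox_sub[2], y + bbox_sub[3])
    draw.rectangle(bbox_im)
    print(bbox_im)
```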

This block of code was instrumental in giving this:

January 25, 4:54 PM

I am so excited.

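```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
from PIL import Image, ImageDraw, ImageFont

im = Image.new("RGB", (400, 400))  # default background colour is black
draw = ImageDraw.Draw(im)

# use a truetype font
font = ImageFont.truetype("/usr/share/fonts/truetype/ttf-bengali-fonts/lohit_bn.ttf", 50)

txt1 = "ক"
txt2 = " ি"  # vowel sign I
# The vowel sign is placed before the consonant, presumably so that a renderer
# without complex-script shaping draws it to the left of ক (visual order).
txt = txt2 + txt1

draw.text((10, 10), txt, font=font)  # default fill is white
im.show()
```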

generated:

That means the *entire* training+testing process can be automated :) :) :)

Damn, I didn't even have to go to ISI. Of course, going to ISI was quite an experience in itself.

January 10, 2009

08:57 PM

Forwarded conversation

Subject: Regarding Bangla training data

------------------------

From: Debayan Banerjee<debayanin@gmail.com>

Date: 2009/1/9

To: mhasnat@gmail.com

Hi,

I was going through your work on ocropus and your training data. I have a few questions:

- Can you share with me your training data?

- Have you trained only for Solaiman lipi font?

- The maatraa-clipping code in lua, what is the logic/pseudocode?

- What is the performance of the lua scripts on the 18 test images?

Eagerly waiting for your reply.

~Debayan


----------

From: Hasnat<mhasnat@gmail.com>

Date: 2009/1/10

To: Debayan Banerjee <debayanin@gmail.com>

Dear Debayan,

sorry for my late reply because of my traveling from Bangladesh to outside. By training data do you mean it for OCROpus or tesseract? For OCROpus we have created training data which was a complex process. I worked on that few months ago to test the training procedure working for Bangla script. I observed that this is quite complex process to prepare training data. Few more work need to be done to complete this. However, we (me and shouro) have tested basic training procedure and observed the performance which seems satisfactory to me but very sensitive. To make that training data very effective we had to collect a large amount of data and train. As the segmentation algorithm was not completed at that time as well as OCROpus was changing its procedures continuously, so I left that task until the next stable version of OCROpus (0.3). Now we again start looking at that and just finished the basic compilation and checking other procedure to integration. So, honestly there is no training data for version 0.3.

We are considering SuttunyMJ font for training which is the most widely used font in the Bangla documents.

I didn't integrate any Matraa clipping code in Lua script. Rather I was focusing on embedding our own procedures with C++ files which is not completed yet. Matraa clipping is a big problem what I observed if you follow the general procedures. I have tested three different methods and observed that nothing is giving 100% accuracy for all type of documents. I think its a big deal to solve yet.

I have tested the images for tesseract. The test images is not following the training document font size and type. Hence for different images we are getting different results. From the feedback of different people at the end user level I have the realization that we have to work more for a market place standard OCR.

I will return back to my country at the end of this month and start working on OCR. Then can concentrate on these issues and hopeful to find out solutions. As we have implemented the complete framework so it will be easier for us to solve the particular problems. Please do share your work with us and you find our work on the web link.

Regards,

--

Hasnat

Center for Research on Bangla Language Processing (CRBLP)

http://mhasnat.googlepages.com/

December 22

05:07 hrs.

This is the Lua script in the OCRopus 0.3 release that deskews a page image. It did not work for me and kept giving this error:

```
ocroscript: ocroscript/scripts/deskew.lua:9: attempt to call global 'make_DeskewPageByRAST' (a nil value)
stack traceback:
        ocroscript/scripts/deskew.lua:9: in main chunk
        [C]: ?
```

I searched on Google and found this. It worked well. The patch is:

```diff
-proc = make_DeskewPageByRAST()
+proc = ocr.make_DeskewPageByRAST()
 input = bytearray:new()
 output = bytearray:new()
-read_image_gray(input,arg[1])
+iulib.read_image_gray(input,arg[1])
 proc:cleanup(output,input)
-write_png(arg[2],output)
```

Result is:

October 28

My work till date:

Author: debayanin

Date: Mon Oct 27 16:41:10 2008

New Revision: 8

Modified:

trunk/ccmain/baseapi.cpp

Log:

auto-indented baseapi.cpp

Modified: trunk/ccmain/baseapi.cpp

==============================================================================

--- trunk/ccmain/baseapi.cpp (original)

+++ trunk/ccmain/baseapi.cpp Mon Oct 27 16:41:10 2008

@@ -409,161 +409,161 @@

////////////DEBAYAN//Deskew begins//////////////////////

void deskew(float angle,int srcheight, int srcwidth)

{

-//angle=4; //45° for example

-IMAGE tempimage;

-

-

-IMAGELINE line;

-//Convert degrees to radians

-float radians=(2*3.1416*angle)/360;

-

-float cosine=(float)cos(radians);

-float sine=(float)sin(radians);

-

-float Point1x=(srcheight*sine);

-float Point1y=(srcheight*cosine);

-float Point2x=(srcwidth*cosine-srcheight*sine);

-float Point2y=(srcheight*cosine+srcwidth*sine);

-float Point3x=(srcwidth*cosine);

-float Point3y=(srcwidth*sine);

-

-float minx=min(0,min(Point1x,min(Point2x,Point3x)));

-float miny=min(0,min(Point1y,min(Point2y,Point3y)));

-float maxx=max(Point1x,max(Point2x,Point3x));

-float maxy=max(Point1y,max(Point2y,Point3y));

-

-int DestWidth=(int)ceil(fabs(maxx)-minx);

-int DestHeight=(int)ceil(fabs(maxy)-miny);

-

-tempimage.create(DestWidth,DestHeight,1);

-line.init(DestWidth);

-

-for(int i=0;i<DestWidth;i++){ //A white line of length=DestWidth

-line.pixels[i]=1;

-}

-

-for(int y=0;y<DestHeight;y++){ //Fill the Destination image with white, else clipmatra wont work

-tempimage.put_line(0,y,DestWidth,&line,0);

-}

-line.init(DestWidth);

-

-

-

-for(int y=0;y<DestHeight;y++) //Start filling the destination image pixels with corresponding source image pixels

-{

- for(int x=0;x<DestWidth;x++)

- {

- int Srcx=(int)((x+minx)*cosine+(y+miny)*sine);

- int Srcy=(int)((y+miny)*cosine-(x+minx)*sine);

- if(Srcx>=0&&Srcx<srcwidth&&Srcy>=0&&

- Srcy<srcheight)

- {

- line.pixels[x]=

- page_image.pixel(Srcx,Srcy);

- }

- }

- tempimage.put_line(0,y,DestWidth,&line,0);

-}

-

-//tempimage.write("tempimage.tif");

-page_image=tempimage;//Copy deskewed image to global page image, so it can be worked on further

-tempimage.destroy();

-//page_image.write("page_image.tif");

-

+ //angle=4; //45° for example

+ IMAGE tempimage;

+

+

+ IMAGELINE line;

+ //Convert degrees to radians

+ float radians=(2*3.1416*angle)/360;

+

+ float cosine=(float)cos(radians);

+ float sine=(float)sin(radians);

+

+ float Point1x=(srcheight*sine);

+ float Point1y=(srcheight*cosine);

+ float Point2x=(srcwidth*cosine-srcheight*sine);

+ float Point2y=(srcheight*cosine+srcwidth*sine);

+ float Point3x=(srcwidth*cosine);

+ float Point3y=(srcwidth*sine);

+

+ float minx=min(0,min(Point1x,min(Point2x,Point3x)));

+ float miny=min(0,min(Point1y,min(Point2y,Point3y)));

+ float maxx=max(Point1x,max(Point2x,Point3x));

+ float maxy=max(Point1y,max(Point2y,Point3y));

+

+ int DestWidth=(int)ceil(fabs(maxx)-minx);

+ int DestHeight=(int)ceil(fabs(maxy)-miny);

+

+ tempimage.create(DestWidth,DestHeight,1);

+ line.init(DestWidth);

+

+ for(int i=0;i<DestWidth;i++){ //A white line of length=DestWidth

+ line.pixels[i]=1;

+ }

+

+ for(int y=0;y<DestHeight;y++){ //Fill the Destination image with white, else clipmatra wont work

+ tempimage.put_line(0,y,DestWidth,&line,0);

+ }

+ line.init(DestWidth);

+

+

+

+ for(int y=0;y<DestHeight;y++) //Start filling the destination image pixels with corresponding source image pixels

+ {

+ for(int x=0;x<DestWidth;x++)

+ {

+ int Srcx=(int)((x+minx)*cosine+(y+miny)*sine);

+ int Srcy=(int)((y+miny)*cosine-(x+minx)*sine);

+ if(Srcx>=0&&Srcx<srcwidth&&Srcy>=0&&

+ Srcy<srcheight)

+ {

+ line.pixels[x]=

+ page_image.pixel(Srcx,Srcy);

+ }

+ }

+ tempimage.put_line(0,y,DestWidth,&line,0);

+ }

+

+ //tempimage.write("tempimage.tif");

+ page_image=tempimage;//Copy deskewed image to global page image, so it can be worked on further

+ tempimage.destroy();

+ //page_image.write("page_image.tif");

+

}

/////////////DEBAYAN//Deskew ends/////////////////////

////////////DEBAYAN//Find skew begins/////////////////

float findskew(int height, int width)

{

-int topx=0,topy=0,sign,count=0,offset=1,ifcounter=0;

-float slope=-999,avg=0;

-IMAGELINE line;

-line.init(1);

-line.pixels[0]=0;

-///////Find the top most point of the page: begins///////////

-for(int y=height-1;y>0;y--){

- for(int x=width-1;x>0;x--){

- if(page_image.pixel(x,y)==0){

- topx=x;topy=y;

- break;

- }

-

- }

-

- if(topx>0){break;};

-}

-///////Find the top most point of the page: ends///////////

-

-

-///////To find pages with no skew: begins//////////////

-int c1,c2=0;

-for(int x=1;x<.25*width;x++){

- while(page_image.pixel((width/2)+x,c1++)==1){ }

- while(page_image.pixel((width/2)-x,c2++)==1){ }

- if(c1==c2){cout<<"0 ANGLE\n";return (0);}

- c1=c2=0;

-}

-///////To find pages with no skew: ends//////////////

-

-cout<<"width="<<width;

-if(topx>0 && topx<.5*width){

- sign=1;

-}

-if(topx>0 && topx>.5*width){

- sign=-1;

-}

-

-

-if(sign==-1){

- while((topx-offset)>width/2){

- while(page_image.pixel(topx-offset,topy-count)==1){

- //page_image.put_line(topx-offset,topy-count,1,&line,0);

- count++;

- }

-

- if((180/3.142)*atan((float)count/offset)<10){

- slope=(float)count/offset;

- ifcounter++;

- avg=(avg+slope);

- }

- count=0;

- offset++;

- }

- avg=(float)avg/ifcounter;

- //cout<<"avg="<<avg<<"\n";

- page_image.write("findskew.tif");

- //cout<<"(180/3.142)*atan((float)(count/offset)="<<(180/3.142)*atan(avg)<<"\n";

- return (sign*(180/3.142)*atan(avg));

-

-}

-if(sign==1){

- while((topx+offset)<width/2){

- while(page_image.pixel(topx+offset,topy-count)==1){

- //page_image.put_line(topx+offset,topy-count,1,&line,0);

- count++;

- }

-

- if((180/3.142)*atan((float)count/offset)<10){

- slope=(float)count/offset;

- ifcounter++;

- avg=(avg+slope);

- }

- count=0;

- offset++;

- }

- avg=(float)avg/ifcounter;

- //cout<<"avg="<<avg<<"\n";

- page_image.write("findskew.tif");

- //cout<<"(180/3.142)*atan((float)(count/offset)="<<(180/3.142)*atan(avg)<<"\n";

- return (sign*(180/3.142)*atan(avg));

-

-}

-

-if(sign==0)

-{return 0;}

-cout<<"SHIT";

-return (0);

+ int topx=0,topy=0,sign,count=0,offset=1,ifcounter=0;

+ float slope=-999,avg=0;

+ IMAGELINE line;

+ line.init(1);

+ line.pixels[0]=0;

+ ///////Find the top most point of the page: begins///////////

+ for(int y=height-1;y>0;y--){

+ for(int x=width-1;x>0;x--){

+ if(page_image.pixel(x,y)==0){

+ topx=x;topy=y;

+ break;

+ }

+

+ }

+

+ if(topx>0){break;};

+ }

+ ///////Find the top most point of the page: ends///////////

+

+

+ ///////To find pages with no skew: begins//////////////

+ int c1,c2=0;

+ for(int x=1;x<.25*width;x++){

+ while(page_image.pixel((width/2)+x,c1++)==1){ }

+ while(page_image.pixel((width/2)-x,c2++)==1){ }

+ if(c1==c2){cout<<"0 ANGLE\n";return (0);}

+ c1=c2=0;

+ }

+ ///////To find pages with no skew: ends//////////////

+

+ cout<<"width="<<width;

+ if(topx>0 && topx<.5*width){

+ sign=1;

+ }

+ if(topx>0 && topx>.5*width){

+ sign=-1;

+ }

+

+

+ if(sign==-1){

+ while((topx-offset)>width/2){

+ while(page_image.pixel(topx-offset,topy-count)==1){

+ //page_image.put_line(topx-offset,topy-count,1,&line,0);

+ count++;

+ }

+

+ if((180/3.142)*atan((float)count/offset)<10){

+ slope=(float)count/offset;

+ ifcounter++;

+ avg=(avg+slope);

+ }

+ count=0;

+ offset++;

+ }

+ avg=(float)avg/ifcounter;

+ //cout<<"avg="<<avg<<"\n";

+ page_image.write("findskew.tif");

+ //cout<<"(180/3.142)*atan((float)(count/offset)="<<(180/3.142)*atan(avg)<<"\n";

+ return (sign*(180/3.142)*atan(avg));

+

+ }

+ if(sign==1){

+ while((topx+offset)<width/2){

+ while(page_image.pixel(topx+offset,topy-count)==1){

+ //page_image.put_line(topx+offset,topy-count,1,&line,0);

+ count++;

+ }

+

+ if((180/3.142)*atan((float)count/offset)<10){

+ slope=(float)count/offset;

+ ifcounter++;

+ avg=(avg+slope);

+ }

+ count=0;

+ offset++;

+ }

+ avg=(float)avg/ifcounter;

+ //cout<<"avg="<<avg<<"\n";

+ page_image.write("findskew.tif");

+ //cout<<"(180/3.142)*atan((float)(count/offset)="<<(180/3.142)*atan(avg)<<"\n";

+ return (sign*(180/3.142)*atan(avg));

+

+ }

+

+ if(sign==0)

+ {return 0;}

+ cout<<"SHIT";

+ return (0);

}

////////////DEBAYAN//Find skew ends///////////////////

@@ -573,101 +573,101 @@

//used only if the language belongs to devnagri, eg, ben, hin etc.

void TessBaseAPI::ClipMaatraa(int height, int width)

{

-IMAGELINE line;

-line.init(width);

-int count,count1=0,blackpixels[height-1][2],arr_row=0,maxbp=0,maxy=0,matras[100][3],char_height;

-//cout<<"Connected Script="<<connected_script<<"\n";

-

-for(int y=0; y<height-1;y++){

- count=0;

- for(int x=0;x<width-1;x++){

- if(page_image.pixel(x,y)==0)

- {count++;}

- }

-

- if(count>=.05*width){

- blackpixels[arr_row][0]=y;

- blackpixels[arr_row][1]=count;

- arr_row++;

- }

-}

-blackpixels[arr_row][0]=blackpixels[arr_row][1]='\0';

-

-for(int x=0;x<width-1;x++){ //Black Line

- line.pixels[x]=0;

-}

-

-////////////line_through_matra() begins//////////////////////

-count=1;

-//cout<<"\nHeight="<<height<<" arr_row="<<arr_row<<"\n";

-char_height=blackpixels[0][0]; //max character height per sentence

-//cout<<"Char Height Init="<<char_height;

-while(count<=arr_row){

- //if(count==0){max=blackpixels[count][0];}

- if((blackpixels[count][0]-blackpixels[count-1][0]==1) && (blackpixels[count][1]>=maxbp)){

- maxbp=blackpixels[count][1];

- maxy=blackpixels[count][0];

- //cout<<"\nMax="<<maxy<<" bpc="<<maxbp;

- }

-

- if((blackpixels[count][0]-blackpixels[count-1][0])!=1){

- /////////////drawline(max)//////////////////////

-

- // cout<<"\nmax="<<maxy<<" bpc="<<maxbp;

-// page_image.put_line(0,maxy,width,&line,0);

- char_height=blackpixels[count-1][0]-char_height;

- matras[count1][0]=maxy; matras[count1][1]=maxbp; matras[count1][2]=char_height; count1++;

- char_height=blackpixels[count][0];

-

- //////////// drawline(max)/////////////////////

- maxbp=blackpixels[count][1];

- }

- count++;

- }

-matras[count1][0]=matras[count1][1]=matras[count1][2]='\0';

-

-//delete blackpixels;

-////////////line_through_matra() ends//////////////////////

-

- ////////////clip_matras() begins///////////////////////////

- for(int i=0;i<100;i++){ //where 100=max number of sentences per page

- if(matras[i][0]=='\0'){break;}

- //cout<<"\nY="<<matras[i][0]<<" bpc="<<matras[i][1]<<" chheight="<<matras[i][2];

- count=i;

-}

-

-for(int i=0;i<=count;i++){

-

- for(int x=0;x<width-1;x++){

- if(page_image.pixel(x,matras[i][0])==0){

- count1=0;

- for(int y=0;y<matras[i][2] && count1==0;y++){

- if(page_image.pixel(x,matras[i][0]-y)==1){count1++;

- for(int z=y+1;z<matras[i][2];z++){

- if(page_image.pixel(x,matras[i][0]-z)==1){count1++;}//black pixel encountered... stop counting.

- else

- {break;}

- }

- }

- }

- //cout<<"\nWPR @ "<<x<<","<<matras[i][0]<<"="<<count1;

- if(count1>.8*matras[i][2]){

- line.init(matras[i][2]+5);

- for(int j=0;j<matras[i][2]+5;j++){line.pixels[j]=1;}

- page_image.put_column(x,matras[i][0]-matras[i][2],matras[i][2]+5,&line,0);

- }

- }

- }

-

-}

-

-page_image.write("bentest.tif");

-

+ IMAGELINE line;

+ line.init(width);

+ int count,count1=0,blackpixels[height-1][2],arr_row=0,maxbp=0,maxy=0,matras[100][3],char_height;

+ //cout<<"Connected Script="<<connected_script<<"\n";

+

+ for(int y=0; y<height-1;y++){

+ count=0;

+ for(int x=0;x<width-1;x++){

+ if(page_image.pixel(x,y)==0)

+ {count++;}

+ }

+

+ if(count>=.05*width){

+ blackpixels[arr_row][0]=y;

+ blackpixels[arr_row][1]=count;

+ arr_row++;

+ }

+ }

+ blackpixels[arr_row][0]=blackpixels[arr_row][1]='\0';

+

+ for(int x=0;x<width-1;x++){ //Black Line

+ line.pixels[x]=0;

+ }

+

+ ////////////line_through_matra() begins//////////////////////

+ count=1;

+ //cout<<"\nHeight="<<height<<" arr_row="<<arr_row<<"\n";

+ char_height=blackpixels[0][0]; //max character height per sentence

+ //cout<<"Char Height Init="<<char_height;

+ while(count<=arr_row){

+ //if(count==0){max=blackpixels[count][0];}

+ if((blackpixels[count][0]-blackpixels[count-1][0]==1) && (blackpixels[count][1]>=maxbp)){

+ maxbp=blackpixels[count][1];

+ maxy=blackpixels[count][0];

+ //cout<<"\nMax="<<maxy<<" bpc="<<maxbp;

+ }

+

+ if((blackpixels[count][0]-blackpixels[count-1][0])!=1){

+ /////////////drawline(max)//////////////////////

+

+ // cout<<"\nmax="<<maxy<<" bpc="<<maxbp;

+ // page_image.put_line(0,maxy,width,&line,0);

+ char_height=blackpixels[count-1][0]-char_height;

+ matras[count1][0]=maxy; matras[count1][1]=maxbp; matras[count1][2]=char_height; count1++;

+ char_height=blackpixels[count][0];

+

+ //////////// drawline(max)/////////////////////

+ maxbp=blackpixels[count][1];

+ }

+ count++;

+ }

+ matras[count1][0]=matras[count1][1]=matras[count1][2]='\0';

+

+ //delete blackpixels;

+ ////////////line_through_matra() ends//////////////////////

+

+ ////////////clip_matras() begins///////////////////////////

+ for(int i=0;i<100;i++){ //where 100=max number of sentences per page

+ if(matras[i][0]=='\0'){break;}

+ //cout<<"\nY="<<matras[i][0]<<" bpc="<<matras[i][1]<<" chheight="<<matras[i][2];

+ count=i;

+ }

+

+ for(int i=0;i<=count;i++){

+

+ for(int x=0;x<width-1;x++){

+ if(page_image.pixel(x,matras[i][0])==0){

+ count1=0;

+ for(int y=0;y<matras[i][2] && count1==0;y++){

+ if(page_image.pixel(x,matras[i][0]-y)==1){count1++;

+ for(int z=y+1;z<matras[i][2];z++){

+ if(page_image.pixel(x,matras[i][0]-z)==1){count1++;}//black pixel encountered... stop counting.

+ else

+ {break;}

+ }

+ }

+ }

+ //cout<<"\nWPR @ "<<x<<","<<matras[i][0]<<"="<<count1;

+ if(count1>.8*matras[i][2]){

+ line.init(matras[i][2]+5);

+ for(int j=0;j<matras[i][2]+5;j++){line.pixels[j]=1;}

+ page_image.put_column(x,matras[i][0]-matras[i][2],matras[i][2]+5,&line,0);

+ }

+ }

+ }

+

+ }

+

+ page_image.write("bentest.tif");

+

////////////clip_matras() ends/////////////////////////////

-

-/////////DEBAYAN/////////////////

-

-

+

+ /////////DEBAYAN/////////////////

+

+

}
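The deskew() routine in the diff above rotates the page by inverse mapping. For every pixel of a larger, white-filled output canvas, it computes which source pixel lands there. For readers who want to experiment outside Tesseract, here is a rough Python sketch of the same idea; it is not a port of the C++. It assumes a bitmap given as rows of 0 (black) and 1 (white), like the IMAGE pixels in the diff. The canvas extent comes from forward-rotating the four corners; the corner (0, h) maps to (-h*sin(theta), h*cos(theta)). Pixel centres are sampled with nearest-neighbour flooring, and `rotate_bitmap` is a hypothetical name.

```python
import math
from typing import List

WHITE = 1  # same convention as the IMAGE pixels in deskew(): 1 = white, 0 = black

def rotate_bitmap(bitmap: List[List[int]], angle_deg: float) -> List[List[int]]:
    """Rotate a 0/1 bitmap by angle_deg using inverse mapping.

    Positive angles turn the image clockwise on screen (rows grow downward).
    The output canvas is enlarged to hold the whole rotated page and is
    pre-filled with white.
    """
    h, w = len(bitmap), len(bitmap[0])
    theta = math.radians(angle_deg)
    c, s = math.cos(theta), math.sin(theta)

    # Forward-rotate the four corners to find the extent of the output.
    corners = [(0, 0), (0, h), (w, h), (w, 0)]
    xs = [x * c - y * s for x, y in corners]
    ys = [x * s + y * c for x, y in corners]
    minx, miny = min(xs), min(ys)
    dest_w = math.ceil(max(xs) - minx)
    dest_h = math.ceil(max(ys) - miny)

    out = [[WHITE] * dest_w for _ in range(dest_h)]
    for y in range(dest_h):
        for x in range(dest_w):
            # Inverse-rotate the output pixel centre to find its source pixel.
            px, py = x + 0.5 + minx, y + 0.5 + miny
            sx = math.floor(px * c + py * s)
            sy = math.floor(py * c - px * s)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = bitmap[sy][sx]
    return out
```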

October 22

It's 4:45 AM.

Task number one for Indic OCR workout participants at foss.in 2008

Implement deskewing (basically straightening a tilted image) code in any language of choice. The algorithm may be any good standard one of your choice. The image to be tested on is this.

Then mail it to me at debayanin AT gmail DOT com, or post it on any mailing list.

I think Hough transforms would be the best way. I have been facing some difficulty implementing this in Python for the test images, but the theory is sound and will ultimately give good results.

October 14 2008

It's 5:05 AM. It feels so nice to revisit this page after four long months. I have seen a lot in these four months. Hmm...

Everything added to this page will act as a reference for the workout proposed at foss.in 2008. It will also serve the work for the Sarai FLOSS fellowship (which I am not sure about yet).

Note: TesseractIndic is Tesseract-OCR with Indic script support. This will remain a separate project until Tesseract-OCR actually decides to accept patches and merge Indic script support. TesseractIndic can be found here.

So let's see where we stand. We have Tesseract-OCR, which works great for English. As a proof of concept, I successfully applied "maatraa clipping" (a new term/approach in the world of OCR, I think!) to the image being fed to the Tesseract-OCR engine. Accuracy obtained by this method, along with some really crappy training, stands at about 85%.

A standard OCR process contains the following steps:

(1) Pre-processing, involving skew removal, etc. Pretty much language-independent, though features like the shirorekha might help here.

(2) Character extraction: Again, largely language-independent, though language dependency might come in because of features like shirorekha.

(3) Character identification: Language-independent, maybe with specialised plugins to take advantage of language features, or items like known fonts.

(4) Post-processing, which involves things like spell-checking to improve accuracy.

The currently available version of Tesseract-OCR does steps 3 and 4 above for any language. But it can only do them if it can do step 2 properly, which it can't for connected scripts like Hindi and Bengali. So the approach is to take the scanned image, apply some pre-processing to it, and then do the "maatraa clipping" operation on it. This image is then fed to the Tesseract-OCR engine.

In detail, the things to do are:

(1) Pre-processing: Skew removal, Noise removal. Skew removal in particular is key for the "maatraa clipping" code to work.

(2) "Maatraa clipping": This enables the Tesseract-OCR engine to treat connected Devanagari script like any other script.

(3) Training: Very important for getting good results, but well documented. Good tools exist for training Tesseract-OCR.

(4) Web Interface: We need to create a web interface so people can freely OCR their documents online. No big deal.

Now my intention is to implement skew removal using Hough transforms. Hough transforms are really good at finding straight lines (among other shapes) in images. So all we need to do is find the "maatraas" and calculate their slope. That gives us the skew angle, and we just rotate the page to correct the skew.
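A minimal pure-Python sketch of Hough-based skew estimation follows. For every candidate angle t, each black pixel votes for the line offset rho = -x*sin(t) + y*cos(t). The angle whose votes pile up in a single offset bin is taken as the skew. The ±10° search range, the 0.5° step and the 1-pixel rho bins are assumptions. A practical version would vote only with headline or edge pixels to keep it fast.

```python
import math
from collections import Counter
from typing import Iterable, Tuple

def hough_skew(points: Iterable[Tuple[float, float]],
               max_angle: float = 10.0, step: float = 0.5) -> float:
    """Return the angle (degrees) of the strongest straight line through `points`.

    Angles are measured from the horizontal in the same (x, y) coordinates as
    the points; only angles in [-max_angle, max_angle] are tried.
    """
    pts = list(points)
    if not pts:
        return 0.0
    best_angle, best_votes = 0.0, -1
    n = int(round(max_angle / step))
    for i in range(-n, n + 1):
        angle = i * step
        t = math.radians(angle)
        # Points on a line tilted by `angle` share the same rho value.
        acc = Counter(round(-x * math.sin(t) + y * math.cos(t)) for x, y in pts)
        votes = max(acc.values())
        if votes > best_votes:
            best_angle, best_votes = angle, votes
    return best_angle
```

With image coordinates (y growing downward), the page would then be rotated by the negative of the returned angle.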

I had implemented "maatraa clipping" using projection-based methods. It seems that morphological operations, a digital image processing technique, may be a better way of doing it. Well, actually I am not that sure about it yet. Still researching and trying out stuff.
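A projection-based approach starts with a horizontal projection profile. Count the black pixels in each row of a text line; the row with the most black pixels is very likely the maatraa, since it runs across whole words. Below is a minimal Python sketch of that step, plus a deliberately simplified clipping heuristic. It whitens maatraa pixels in columns that have no ink below the maatraa, which are gaps joined only by the headline. This is not a port of ClipMaatraa() in the diff. It assumes a single text line given as rows of 0 (black) and 1 (white), as in the C++ code, and the function names are hypothetical.

```python
from typing import List

BLACK, WHITE = 0, 1  # same convention as the C++ code: 0 = black, 1 = white

def find_maatraa_row(line_bitmap: List[List[int]]) -> int:
    """Index of the row with the most black pixels (horizontal projection)."""
    profile = [row.count(BLACK) for row in line_bitmap]
    return max(range(len(profile)), key=profile.__getitem__)

def clip_maatraa(line_bitmap: List[List[int]]) -> List[List[int]]:
    """Return a copy with maatraa pixels whitened where no ink lies below.

    Such columns are gaps between characters that only the headline joins,
    so whitening them can split the line into separate characters.
    """
    r = find_maatraa_row(line_bitmap)
    out = [row[:] for row in line_bitmap]
    for x in range(len(out[r])):
        if all(out[y][x] == WHITE for y in range(r + 1, len(out))):
            out[r][x] = WHITE
    return out
```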

Now, I had done all this work in C++, as the Tesseract-OCR code is also in C++. But of late I have been mesmerised by the simplicity and power of Python and the Python Imaging Library. All the work I am doing now, including Hough transforms, is in Python. So now we have two options:

(1) Do the pre-processing and "maatraa clipping" in Python and feed the page to the Tesseract-OCR (will be easy and quicker to implement)

(2) Do the entire thing in C++ (will execute much faster)

Again, we will probably end up doing both. At foss.in, I will probably bring along Python code that already works, and ask people to port it to C++ and merge it upstream into TesseractIndic. Or we could ask people to implement algorithms of their choice, in the language of their choice, on a common set of test images. We would then convert that work to C++ and add it.

Going to sleep now. This page will keep growing on a daily basis from now on, so keep checking it.

PS: Special thanks to Mr. Sankarshan and Mr. Gora Mohanty for supporting me throughout.

June 24 2008

It's 01:45 AM. Here is a mail I received from Mr. Gora Mohanty in reply to my last post below (23 June), and also to a mail I sent to the Aksharbodh mailing list:

1. What were the issues with displaying Bengali fonts on Linux? Sample images would help, as you do not give enough details to go on. People on the indlinux-group mailing list (go to http://indlinux.org and the Mailing Lists link towards the bottom to subscribe), and the Ankur Bangla folk ought to be able to help you out here.

What GUI toolkit is tesseractTrainer.py using? Both gtk, and QT should work fine for Bengali text, at least in any UTF-8 locale.

2. Could you give figures for % accuracy? Not all of us can read Bangla.

3. Is there any documentation on what training involves, and what kind of training text you need? Could you use the copious amount of Bangla text in the GNOME/KDE .po files for Bengali?

Regards,

Gora

Ans 1) The issues with displaying Bengali fonts in tesseractTrainer.py have mysteriously resolved themselves!! It does display Bengali text now. Here is a screenshot:

It shows that I can now type and read Bengali anywhere on my Linux installation, including gedit, Mozilla, the terminal and tesseractTrainer.py.

Ans 2) The two test images below gave an accuracy of 89% and 85% respectively. These figures are not precise; I just did a quick one-time count of the errors. Some of the errors occurred because I haven't trained the engine with that particular character yet, and some because I fed it the wrong character.
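To make such figures repeatable, character accuracy is often computed as 1 - (edit distance / reference length). The edit distance is the Levenshtein distance between the OCR output and a hand-typed reference text. Below is a small Python sketch. Counting Unicode code points rather than Bengali grapheme clusters is a simplifying assumption.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute or match
        prev = cur
    return prev[-1]

def char_accuracy(reference: str, ocr_output: str) -> float:
    """1 - edit_distance / len(reference), floored at 0."""
    if not reference:
        return 1.0 if not ocr_output else 0.0
    return max(0.0, 1 - edit_distance(reference, ocr_output) / len(reference))
```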

Ans 3) Well, the entire training process is described at http://code.google.com/p/tesseract-ocr/wiki/TrainingTesseract. But what I really need is an image, either scanned or electronically rendered from some text editor, together with its box file. The image should contain as many samples of characters as possible, including conjuncts for every character. So the steps are:

1) Type all the possible characters and conjuncts in an editor (for example, type ক কি কী কা কু ক then খ খা খী খি etc). Increase the font size a little bit.

2) Use screen capture software, and from the generated image crop the part that contains the characters and nothing else.

3) Convert the image to TIFF format. Then run it through tesseract downloaded from here using this command:

```
tesseract fontfile.tif fontfile batch.nochop makebox
```

where fontfile is the name of the image. This will create a new file named fontfile.txt. Change its extension to .box.

4) Now download tesseractTrainer.py or bbtesseract, open this image in it and edit the box file.

5) Mail the image and box file to me.
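Step 3 can be scripted so that it runs over many images. Below is a minimal Python sketch. It assumes the `tesseract` binary from step 3 is on the PATH and, as described above, writes `<name>.txt` next to the image. `makebox_command` and `make_box_file` are hypothetical helper names.

```python
import os
import subprocess
from typing import List

def makebox_command(tif_path: str) -> List[str]:
    """Build the makebox command line from step 3 for one image."""
    base = os.path.splitext(tif_path)[0]
    return ["tesseract", tif_path, base, "batch.nochop", "makebox"]

def make_box_file(tif_path: str) -> str:
    """Run makebox on tif_path and rename the resulting .txt to .box."""
    base = os.path.splitext(tif_path)[0]
    subprocess.run(makebox_command(tif_path), check=True)
    os.replace(base + ".txt", base + ".box")  # step 3: change the extension
    return base + ".box"
```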

June 23 2008

It's 11:54 AM. I have been training Tesseract_indic with images of Bengali text. A lot of time was spent fixing segfaults, memory allocation errors etc., which slowed me down. I also have my college placements to prepare for, so I could not devote a lot of time.

Training was made difficult by the fact that tesseractTrainer.py did not display Bengali fonts properly on Linux, although I have the locales set properly and I can type in Bengali anywhere on my Linux installation. Initially I had to frequently swap between Windows and Linux, since I was using bbtesseract, a utility that edits box files for Tesseract training images. Both utilities are very useful and I can imagine how hard it would have been otherwise.

The test results are poor as of now. I havent trained it properly, and the maatraa clipping code has to be improved for the results to be of acceptable quality.

Here are the three images i trained it with:

The corresponding box files are at http://tesseractindic.googlecode.com/files/imges_boxes.tar.gz .

If you want to help out by training it, download this, and then follow this.

The patches here helped a lot; I do not know why they haven't been integrated into Tesseract. I also managed to get a 152,000-word list from the Ankur Bangla project. It will improve the accuracy a lot. Initially it had some strange characters, but I cleaned it up with sed and merged all the words into one big file.
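A C++ equivalent of that kind of sed cleanup might look like the sketch below; it is not the command used for the actual list. It assumes the list is UTF-8 with one word per line, and it treats as "strange" any character outside the Bengali Unicode block (U+0980 to U+09FF) other than the zero-width non-joiner and joiner (U+200C, U+200D). Words containing such characters are dropped, and the rest are sorted and de-duplicated. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Decodes a UTF-8 string into code points. Returns false on malformed input
// (bad lead byte, missing or bad continuation byte). Overlong forms are not
// rejected, which is acceptable for a filtering pass like this one.
bool DecodeUtf8(const std::string& s, std::u32string* out) {
  assert(out != nullptr);
  out->clear();
  std::size_t i = 0;
  while (i < s.size()) {
    const unsigned char lead = static_cast<unsigned char>(s[i]);
    std::size_t extra;  // number of continuation bytes after the lead byte
    char32_t cp;
    if (lead < 0x80)              { extra = 0; cp = lead; }
    else if ((lead >> 5) == 0x6)  { extra = 1; cp = lead & 0x1F; }
    else if ((lead >> 4) == 0xE)  { extra = 2; cp = lead & 0x0F; }
    else if ((lead >> 3) == 0x1E) { extra = 3; cp = lead & 0x07; }
    else return false;
    if (s.size() - i <= extra) return false;  // sequence cut off
    for (std::size_t k = 1; k <= extra; ++k) {
      const unsigned char next = static_cast<unsigned char>(s[i + k]);
      if ((next >> 6) != 0x2) return false;  // must look like 10xxxxxx
      cp = (cp << 6) | (next & 0x3F);
    }
    out->push_back(cp);
    i += extra + 1;
  }
  return true;
}

// Bengali block U+0980..U+09FF plus ZWNJ (U+200C) and ZWJ (U+200D).
bool IsBengaliWordChar(char32_t c) {
  return (c >= 0x0980 && c <= 0x09FF) || c == 0x200C || c == 0x200D;
}

// Reads one word per line, keeps only words made entirely of Bengali code
// points, then sorts and removes duplicates.
std::vector<std::string> CleanWordList(std::istream& in) {
  std::vector<std::string> words;
  std::string line;
  std::u32string cps;
  while (std::getline(in, line)) {
    if (!line.empty() && line.back() == '\r') line.pop_back();  // CRLF files
    if (line.empty() || !DecodeUtf8(line, &cps)) continue;
    if (std::all_of(cps.begin(), cps.end(), IsBengaliWordChar))
      words.push_back(line);
  }
  std::sort(words.begin(), words.end());
  words.erase(std::unique(words.begin(), words.end()), words.end());
  return words;
}
```

Lines with leading or trailing spaces are dropped too, since a space is outside the allowed set; trimming them first would be a simple extension.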

I need people to start submitting training data, either to me or to the Tesseract group. I will make a few changes to the maatraa clipping code and mail the patch to Ray Smith. Let's see what happens.

Initial results are here:

OCRed text:

খযাব শনবীর খরওজায়

মাওলানা েমাহাম্মদ অাব্দুল েমাতালিব খশহীদ

েক অাছ ভাইখ নবীর েপ্রমিক চল নিডয় অামাডক

সাত সমুদ্র েপড়িেয় যাব নূর নবীজির রওজােত া ঐ

পাপি অামি কি েয করি রওজায় য৮ব েনই েকা কড়ি (২ব৮রহ

পাখা যদি থাকত অামার ভর করিতাম পাখােত া ঐ

দয়াল নবী দয়ায় ভরা েপালােমের দাওেপা ধরা (২বারহ

মাওক নিনা অাডশক হডয় েকমডন থযাব জারIডত া ঐ

ওেগা নবী গেলর মালা দ্রহর কের খদাও দ্রমদ্রেনদ্রর খজাত াা (২বা রদ্রহ

ঠভাইদ্র দিডয় চরন তডল বনর কর অামাডক া ঐ

পবীর রাডত কনদি বডস নবীজিডক পাইব৮র ত াাডশ (২বারহঠ

নবীজির দানি রিডন চাইখনা েযডত জায়াডত া ঐ

অবীন শহীেদ ভােক েক যাও েতারা রওজা পােক (২বারহ

অামায় সােথ নিেয় চল যাব নবীর খজার া েত I ঐ

Accuracy: 89% approx

OCRed text:

জন গণ মন অধি নায়ক জয় েহ

ভ\রত ভIগ্য বিধাত\I

পঞ্জ\ব গুজর\ত মর\ঠ\

দ্রারিড় উহকল বতমা া

বিংধ্য হিম\চল য়মুIা গংা

উচ্ছল জদ্রনরি তরহপা া

তব মুভ নাহম জােগ মু

তব গুভ অ\াদ্রাধ ম\াগ ৮

গাাহ তব ড়াব গ\থাI

জন গণ মংলদ\য়ক জয় েহ

ভ\রত ভদ্রগ্য বিধাতাI

ভহয় ৮ঠহ া জয় চহ ই ভহয় াঠহা

জয় জহা ড়ায় জয় েহ ভ

Accuracy: 85% approx

Yeah, yeah, I know. It's not that great. But it's only going to get better, and I didn't train it properly, so be cheerful!!

Go to http://code.google.com/p/tesseractindic for all the downloadable stuff.

(PS: Some of my friends seem to think I made this software. Well, to them I would like to say this: I am trying to add a pinch of salt to an already cooked and fine meal. Nothing more and nothing less :) )

June 12, 2008

It's 11:50 AM. The latest work is here. The download is here, and the patch is here.

Next is training, the most important part. It is what will finally make the engine usable.

Tom from OCRopus/Tesseract has been kind enough to help me out with maatraa clipping after going through some of my work.

June 8, 2008

It's 11:40 PM. This is my first release of the Tesseract OCR engine with Indic script support. Download the .tar.gz archive, or the patch if you already have Tesseract 2.03.

The release is very much in its alpha stage right now. In fact, after downloading the archive, you will have to train the engine for your language of choice. Here is how to train it. I will soon add the complete archive, with training data for Bengali, and later for Hindi.

You *must* download this English training data for the engine to recognise English text. My advice is to wait a few more days until I release a fully working version. I have also applied for SourceForge hosting space.

I will mail the patches to the Tesseract maintainers only after I have the training data ready.

And I have decided I will go to sleep by 4:30 AM every day.

June 7, 2008

It's 7:54 AM. From now on I shall document everything in a fairly formal manner. Here is the algorithm for the maatraa clipping code.

In a few days I shall provide the diff file, i.e. the code itself.

June 3, 2008

Spent the entire night experimenting with the code. It's 05:28 hrs now. No big deal, though; I usually sleep at 6:30 in the morning.

Made my first box file for the "national anthem" image. I first made it with the normal Tesseract engine. As expected, it classified whole words as blobs. Then I made box files after adding my code, and I was delighted by the results. Here are the related screenshots.

The first two images show boxes drawn by bbtesseract from box files generated by the generic Tesseract engine.

For all the boxes shown below, bbtesseract used box files generated by the modified Tesseract engine, which includes the maatraa clipping code. The results are quite good; character segmentation is good.

Below are the incorrect segmentations, which show flaws in the algorithm I came up with (which I am sure anyone else would also come up with, so I am not boasting).

The image below suffers from the same boxing problem. The solution is still not apparent, because I do not know how to box the hossoi (the short-i vowel sign ি), since it overlaps the horizontal extent of the second letter. I will figure something out though :)

Here is another problem, the opposite of the previous one: the loop below the "ga" overlaps the horizontal extent of the second letter, but at the bottom.

Same problem.

What really remains is training the engine, which is simple, and then all of a sudden we have a working, free Indic OCR engine!!

Will sleep now, and update this page later.

May 27, 2008

I have had a fascination with digital image processing (DIP) and OCR-related projects since my second year of college. That was when some professors from ISI Kolkata came down to our college for a workshop on DIP. Now, after 18 months or so, I have finally done something in that direction.

Tesseract was developed at HP Labs; HP open-sourced it in 2005, and Google has been developing it since. Some parts of it are still proprietary, like the feature recognition algorithms, and it is released under the Apache License, but it was great for me to work on. The two main developers of Tesseract are Ray Smith and Luc Vincent, both legends and engineers at Google.

Well, let's get down to the problem. Tesseract currently does not support connected scripts or handwritten text. Indic scripts such as Bengali and Devanagari (used for Hindi) have a matra (মাত্রা), a horizontal headline that is like an underline, except that it runs along the top of the word instead of beneath it. Tesseract recognises machine-printed English, relying on the gaps between successive characters to separate each character into its own blob. Hence, theoretically, every isolated character is a blob.

The problem with recognising these scripts is that the matra (মাত্রা) joins the entire word into a single blob, so the whole character recognition system fails. The solution is to clip the matra between successive characters. That way, the same engine can see each character as an isolated blob.
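The effect of the matra can be shown on a toy bitmap. This sketch treats a blob, in simplified terms, as an 8-connected component of black pixels; Tesseract's own blob finding traces outlines and is more involved, so CountBlobs is a hypothetical illustration only. Two stems joined by a matra form one blob, and whitening the matra in the gap column between them leaves two.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Counts 8-connected components of '#' pixels in a bitmap given as rows of
// '#' (black) and '.' (white). All rows must have the same length.
int CountBlobs(const std::vector<std::string>& img) {
  const int h = static_cast<int>(img.size());
  const int w = h > 0 ? static_cast<int>(img[0].size()) : 0;
  for (const std::string& row : img) assert(static_cast<int>(row.size()) == w);
  std::vector<std::vector<bool>> seen(h, std::vector<bool>(w, false));
  int blobs = 0;
  for (int y = 0; y < h; ++y) {
    for (int x = 0; x < w; ++x) {
      if (img[y][x] != '#' || seen[y][x]) continue;
      ++blobs;  // unvisited black pixel: start of a new blob
      // Iterative flood fill over the 8 neighbours (no deep recursion).
      std::vector<std::pair<int, int>> stack(1, std::make_pair(y, x));
      seen[y][x] = true;
      while (!stack.empty()) {
        const std::pair<int, int> p = stack.back();
        stack.pop_back();
        for (int dy = -1; dy <= 1; ++dy) {
          for (int dx = -1; dx <= 1; ++dx) {
            const int ny = p.first + dy, nx = p.second + dx;
            if (ny < 0 || ny >= h || nx < 0 || nx >= w) continue;
            if (img[ny][nx] == '#' && !seen[ny][nx]) {
              seen[ny][nx] = true;
              stack.push_back(std::make_pair(ny, nx));
            }
          }
        }
      }
    }
  }
  return blobs;
}
```

With the rows `#########`, `..#...#..`, `..#...#..`, `..#...#..`, the function counts one blob; setting the matra pixel in the middle column to `.` makes it two.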

The steps involved were:

- Go through the Tesseract source code and identify the place where this could be added.

- Once the function(s) have been identified, think about the algorithm that would allow us to clip matras.

- The algorithm itself is as follows:

- Threshold the image. Tesseract already takes care of this.

- Read each row of the image, starting from the bottom (y=0), and note the black pixel count of each row.

- Between two successive zones of rows with zero black pixels (that is, within one text line), find the row with the maximum black pixel count. This row is the matra.

- Do the same for the entire page and store the Y coordinate of each matra found.

- Now take one such matra Y coordinate. For each X coordinate, start at the matra and move down, measuring the continuous run of white pixels below it. If this run is longer than a set fraction of the text line's height (the code below uses 80%) but shorter than the full height, the column is a gap between two characters; a completely white column is already a gap between words. Clip this column and the matra above it.

Black lines between successive characters signify that those spaces have been marked for clipping.

- Repeat for every matra on the page.
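Here are the steps above as a minimal, self-contained sketch on a small binary bitmap, assuming (as in Tesseract) that y=0 is the bottom row and 0 means black. It is not the Tesseract patch: the Bitmap and TextLine types and the FindMatras and ClipMatras functions are hypothetical, the 0.8 fraction is taken from the ThresholdRect code in this post, the white run is measured from the matra down to the bottom of its text line, and a qualifying column is whitened over the whole text line.

```cpp
#include <cassert>
#include <vector>

// Binary page image: page[y][x], y = 0 is the bottom row, 0 = black, 1 = white.
typedef std::vector<std::vector<int> > Bitmap;

struct TextLine {
  int bottom;   // lowest row of the line that contains black pixels
  int top;      // highest such row
  int matra_y;  // row with the most black pixels: the matra
};

// Steps 2-4: scan rows upwards from y = 0; a run of rows with black pixels
// between two empty rows is a text line, and its densest row is the matra.
std::vector<TextLine> FindMatras(const Bitmap& page) {
  std::vector<TextLine> lines;
  int best = -1;  // black count of the current matra; -1 = not inside a line
  for (int y = 0; y < static_cast<int>(page.size()); ++y) {
    int black = 0;
    for (int p : page[y]) black += (p == 0);
    if (black == 0) { best = -1; continue; }  // an empty row ends the line
    if (best < 0) {  // first inked row: a new text line starts here
      TextLine fresh = {y, y, y};
      lines.push_back(fresh);
      best = black;
    }
    TextLine& line = lines.back();
    line.top = y;
    if (black >= best) { best = black; line.matra_y = y; }
  }
  return lines;
}

// Step 5: for every column, measure the first run of white pixels going down
// from the matra to the bottom of the line. A run longer than `fraction` of
// that span, but shorter than the whole span (a word gap), marks a gap
// between characters, so the column is whitened over the whole text line.
// Returns the number of columns clipped.
int ClipMatras(Bitmap& page, double fraction = 0.8) {
  assert(fraction > 0.0 && fraction < 1.0);
  const std::vector<TextLine> lines = FindMatras(page);
  int clipped = 0;
  for (const TextLine& line : lines) {
    const int span = line.matra_y - line.bottom + 1;
    const int width = static_cast<int>(page[line.matra_y].size());
    for (int x = 0; x < width; ++x) {
      int y = line.matra_y, run = 0;
      while (y >= line.bottom && page[y][x] == 0) --y;  // skip the matra's ink
      while (y >= line.bottom && page[y][x] == 1) { --y; ++run; }
      if (run > fraction * span && run < span) {
        for (int yy = line.bottom; yy <= line.top; ++yy) page[yy][x] = 1;
        ++clipped;
      }
    }
  }
  return clipped;
}
```

Columns at the very ends of a word that are white under the matra get clipped too; that only trims the ends of the matra and does not merge or split any glyph.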

Here is a tif image. It contains India's national anthem in Bengali.

Here is the image after clipping the matras:

If you look carefully, you will see that the matras have been clipped between successive characters. Now, it is more or less ready to be fed to the Tesseract engine.

Now for the all-important code. This is the only function I altered. It is in tesseract-2.03/ccmain/baseapi.cpp. I did not provide the diff file because I made some more changes in other parts that I am not sure of.

// Threshold the given grey or color image into the tesseract global
// image ready for recognition. Requires thresholds and hi_value
// produced by OtsuThreshold above.
// DEBAYAN: after thresholding, find the matra of every text line and clip it
// between characters. Needs <vector> and <algorithm> included in baseapi.cpp.
void TessBaseAPI::ThresholdRect(const unsigned char* imagedata,
                                int bytes_per_pixel,
                                int bytes_per_line,
                                int left, int top,
                                int width, int height,
                                const int* thresholds,
                                const int* hi_values) {
  IMAGELINE line;
  page_image.create(width, height, 1);
  line.init(width);
  // For each line in the image, fill the IMAGELINE class and put it into the
  // Tesseract global page_image. Note that Tesseract stores images with the
  // bottom at y=0 and 0 is black, so we need 2 kinds of inversion.
  const unsigned char* data = imagedata + top*bytes_per_line +
                              left*bytes_per_pixel;
  for (int y = height - 1 ; y >= 0; --y) {
    const unsigned char* pix = data;
    for (int x = 0; x < width; ++x, pix += bytes_per_pixel) {
      line.pixels[x] = 1;
      for (int ch = 0; ch < bytes_per_pixel; ++ch) {
        if (hi_values[ch] >= 0 &&
            (pix[ch] > thresholds[ch]) == (hi_values[ch] == 0)) {
          line.pixels[x] = 0;
          break;
        }
      }
    }
    page_image.put_line(0, y, width, &line, 0);
    data += bytes_per_line;
  }

  /////////DEBAYAN//////////////////
  // Row profile: y and black pixel count of every row that has ink.
  // std::vector replaces the variable-length array blackpixels[height-1][2]
  // (not standard C++, and its '\0' sentinel was written one past the end)
  // and the fixed matras[100][3], which overflowed on pages with 100+ lines.
  std::vector<int> row_y, row_black;
  for (int y = 0; y < height; y++) {
    int count = 0;
    for (int x = 0; x < width; x++)
      if (page_image.pixel(x, y) == 0) count++;
    if (count > 0) {
      row_y.push_back(y);
      row_black.push_back(count);
    }
  }

  ////////////line_through_matra() begins//////////////////////
  // A text line is a run of consecutive inked rows. Its matra is the row with
  // the most black pixels; its height is top row minus bottom row. maxy is
  // reset for every line, so a line whose first row is densest no longer
  // inherits the previous line's matra.
  std::vector<int> matra_y, matra_height;
  size_t start = 0;
  while (start < row_y.size()) {
    size_t end = start;
    int maxbp = row_black[start], maxy = row_y[start];
    while (end + 1 < row_y.size() && row_y[end + 1] - row_y[end] == 1) {
      ++end;
      if (row_black[end] >= maxbp) {
        maxbp = row_black[end];
        maxy = row_y[end];
      }
    }
    matra_y.push_back(maxy);
    matra_height.push_back(row_y[end] - row_y[start]);
    start = end + 1;
  }
  ////////////line_through_matra() ends//////////////////////

  ////////////clip_matras() begins///////////////////////////
  for (size_t i = 0; i < matra_y.size(); i++) {
    const int my = matra_y[i], h = matra_height[i];
    for (int x = 0; x < width; x++) {
      // Length of the first run of white (1) pixels going down from the
      // matra, looking at most h rows and never below y=0.
      int y = 0, run = 0;
      while (y < h && my - y >= 0 && page_image.pixel(x, my - y) == 0) y++;
      while (y < h && my - y >= 0 && page_image.pixel(x, my - y) == 1) {
        run++;
        y++;
      }
      // A long but incomplete white run marks a gap between two characters
      // (a fully white column is already a gap between words).
      if (run > 0.8 * h && run < h) {
        // Whiten the column from h rows below the matra to 4 rows above it,
        // clamped to the image.
        int bottom = std::max(0, my - h);
        int len = std::min(my + 5, height) - bottom;
        line.init(len);
        for (int j = 0; j < len; j++) line.pixels[j] = 1;
        page_image.put_column(x, bottom, len, &line, 0);
      }
    }
  }
  page_image.write("bentest.tif");  // Debug dump of the clipped page.
  ////////////clip_matras() ends/////////////////////////////
  /////////DEBAYAN/////////////////
}

Problems with the code:

- It assumes the image is perfectly straight (not skewed). This assumption is obviously wrong, but Tesseract already has an inbuilt function to correct skew. In any case, because these scripts have a matra, finding the angle of tilt is pretty simple.

- It runs this "matra-clipping" code on all languages, which is totally wrong. One just has to add an if-else check so that it runs only for Indic scripts such as hin_in, ben_in, etc.

- I implemented the entire thing in one block of code. Will break it into a few functions.

- The algorithm has gaping flaws. Will plug them up.

- Many more: some I know, most I don't :)
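On the first problem in the list above (skew): because the matra puts many black pixels into a single row, one well-known way to estimate the tilt is a projection-profile search, sketched here. It shears the black pixels by each candidate angle, builds the row histogram, and keeps the angle whose histogram is most sharply peaked (largest sum of squared bin counts). This is a generic technique, not Tesseract's built-in deskew, and the function name, the ±5 degree range and the 0.1 degree step are assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <map>
#include <vector>

struct Point { int x, y; };  // one black pixel; y grows upwards

// Projection-profile skew search: for each candidate angle, shear the black
// pixels so that a line tilted by that angle becomes horizontal, histogram
// the sheared rows, and score the histogram by its sum of squared counts.
// A matra concentrates many pixels in one row, so the correct angle gives the
// sharpest peaks. Ties go to the angle closest to zero.
double EstimateSkewDegrees(const std::vector<Point>& black,
                           double max_deg = 5.0, double step_deg = 0.1) {
  assert(max_deg >= 0.0 && step_deg > 0.0);
  const double kPi = 3.14159265358979323846;
  const long steps = std::lround(max_deg / step_deg);
  double best_angle = 0.0, best_score = -1.0;
  for (long i = -steps; i <= steps; ++i) {
    const double angle = i * step_deg;
    const double slope = std::tan(angle * kPi / 180.0);
    std::map<long, double> rows;  // sheared row -> number of black pixels
    for (const Point& p : black) rows[std::lround(p.y - p.x * slope)] += 1.0;
    double score = 0.0;
    for (const auto& bin : rows) score += bin.second * bin.second;
    if (score > best_score ||
        (score == best_score && std::fabs(angle) < std::fabs(best_angle))) {
      best_score = score;
      best_angle = angle;
    }
  }
  return best_angle;
}
```

A positive result means the text lines rise to the right (with y increasing upward, as in Tesseract); rotating the page by the negative of that angle should level them before the matra search runs.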

Long-term goal:

- Adding Indic script support to Tesseract, which includes making the above code error-free and then training Tesseract. As far as I understand, the Tesseract developers are not yet concentrating on connected-script support, so I hope my work does not overlap with Google's.

And I solemnly swear that I did not plagiarise or steal the code from anywhere, and that I came up with the algorithm myself (which is why it is full of bugs).

Honestly, I had a great time working on this, my friend!!

(PS: What happened to Aksharbodh? If anybody knows, please tell me.)

http://hacking-tesseract.blogspot.com/2009/12/preliminary-results-for-tilt-method.html
